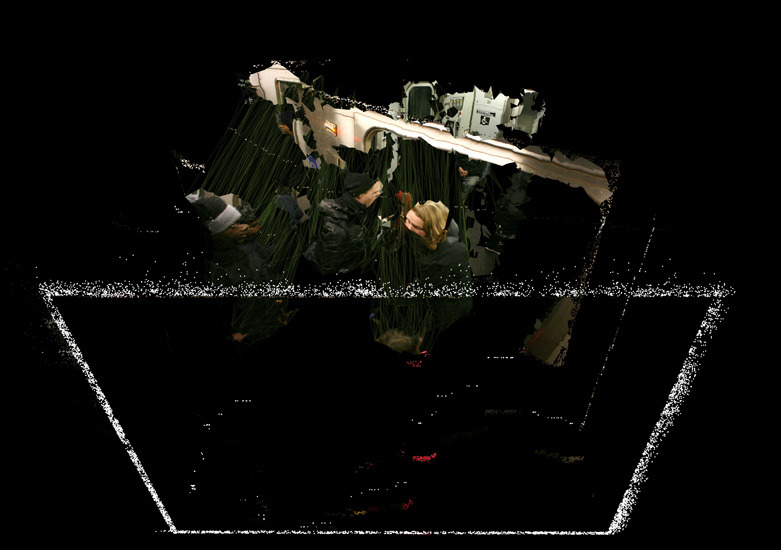

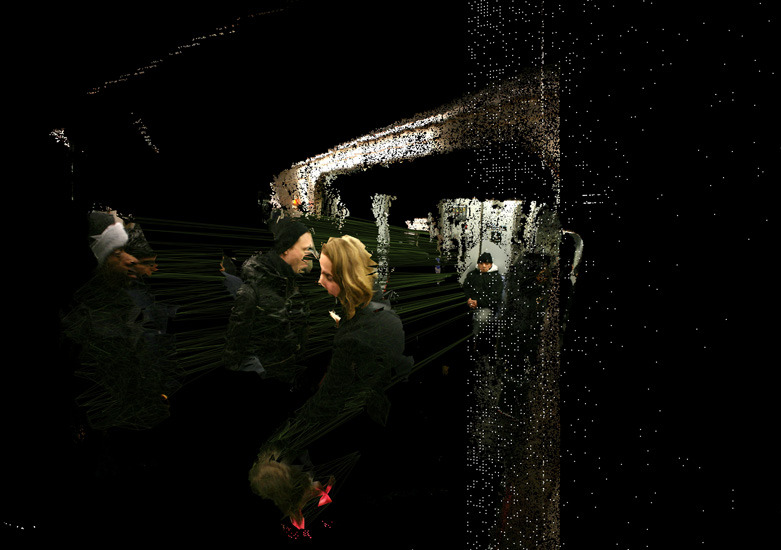

Depth Editor Debug | Print Series, Custom Software

Series of Five

Only six weeks after the tragic 2005 subway bombings in London, New York's Metropolitan Transportation Authority (MTA) signed a contract with the defense and military technology giant Lockheed Martin for a high-tech surveillance system driven by computer vision and artificial intelligence. The security system failed miserably and the contract collapsed under lawsuits. As a result, thousands of security cameras in New York subway stations sit unused, and the public is kept safe through old-fashioned methods of law enforcement [via WNYC].

The Depth Editor Debug series imagines what the world would have looked like through the eyes of that system.

This project created the first software prototype and proof of concept for RGBD Toolkit, an open-source volumetric capture toolset, and ultimately Depthkit.

-

In October of 2010, science fiction author Bruce Sterling gave the keynote speech concluding the Vimeo Festival + Awards in New York. He described his prediction of the future of imaging technology. Regarding how a camera of the future may function, Sterling said:

"It simply absorbs every photon that touches it from any angle. And then in order to take a picture I simply tell the system to calculate what that picture would have looked like from that angle at that moment. I just send it as a computational problem out in to the cloud wirelessly."

One month later, Microsoft released its new video game controller, the Xbox Kinect. Kinect is unique in that it uses a depth-sensing camera and computer vision to sense the position and gestures of gamers. Visualizations of space as seen through Kinect's sensors can be computed from any angle using 3D software. When the drivers were made available, online communities of creative software developers were flooded with artistic and novel interpretations of this data. The images often depicted people as clouds of dots and wireframes, human figures moving in time.

A group of hackers released an open-source device driver that allowed programmers to access the Kinect's data on a personal computer. Enabled by this capability, we soldered together an inverter and motorcycle batteries to run a laptop and a Kinect sensor on the go. We attached a Canon 5D DSLR to the sensor and plugged it into the laptop. The entire kit went into a backpack.

We spent an evening in New York's Union Square subway station capturing pedestrians. The images we generated depict fragments of candid portraits placed into three-dimensional space. They combine depth data captured through the Kinect's hacked drivers with digital photographs, using custom software.

These prints are selected renderings from this process.
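The combination of depth data and photographs described above can be illustrated with a minimal sketch. This is not the project's actual software. It assumes a simple pinhole camera model, and the names `depth_to_points`, `colorize`, the intrinsics `fx, fy, cx, cy`, and the `offset` between the two cameras are all hypothetical. Each depth pixel is back-projected into 3D, then projected into the photograph to pick up a color.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]


def depth_to_points(depth: List[List[float]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (rows of metres, 0 = no reading) into 3D points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # the sensor returned no depth for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def colorize(points: List[Point], photo: List[List[Color]],
             fx: float, fy: float, cx: float, cy: float,
             offset: Point = (0.0, 0.0, 0.0)) -> List[Tuple[Point, Color]]:
    """Project each 3D point into the photo camera and sample the nearest pixel.

    Assumption: the two cameras differ only by a translation (offset);
    a real rig would also need a calibrated rotation and lens model.
    """
    height, width = len(photo), len(photo[0])
    colored = []
    for x, y, z in points:
        px, py, pz = x - offset[0], y - offset[1], z - offset[2]
        if pz <= 0:
            continue  # point is behind the photo camera
        u = int(round(fx * px / pz + cx))
        v = int(round(fy * py / pz + cy))
        if 0 <= u < width and 0 <= v < height:
            colored.append(((x, y, z), photo[v][u]))
    return colored


# Toy 2x2 example with made-up values
depth = [[0.0, 2.0],
         [1.0, 0.0]]
photo = [[(10, 10, 10), (20, 20, 20)],
         [(30, 30, 30), (40, 40, 40)]]
points = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
cloud = colorize(points, photo, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

Once the points carry color, they can be rendered from any virtual viewpoint, which is what lets a single capture yield portraits seen from angles the camera never occupied.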

-

Enter5: Datapolis, Prague, Czech Republic

Eyebeam, NYC | Wired Frames

Brooklyn Photo, NYC | Resolve

The Active Space, Brooklyn, New York | Vegan Pizza Party

-

Creative Applications – Kinect NYC Subway [openFrameworks, Kinect]

The Verge – You are being watched: making art from tracking technology

Today in Art – Digital Artwork

Academic Paper – The Return of Technology as the Other in Visual Practices